Moving and Obstacle Detection using Ultrasonic Sensors

The content of this page is provided by Nadja Konrad and Mingze Chen. All rights reserved.

Project Documentation

Let a personal robot drive around and explore its environment, using only the ultrasonic sensor to avoid collisions. Student project by Nadja Konrad and Mingze Chen.

Preliminary Considerations

We were not supposed to use external hardware (e.g. a tablet) for the task, so we first had to figure out which of the robot's sensors we could use. There were two options: the ultrasonic sensor and the Intel RealSense camera. For a visual approach, the depth camera would of course have been the perfect choice, and Visual Simultaneous Localization and Mapping (SLAM) could have been a strategy for solving the exploration and mapping problem. However, we decided not to work on SLAM for two reasons.

First, the robot's documentation stated that the Segway developers were planning to implement SLAM themselves. We did not want to work on a solution that the robot would sooner or later support anyway.

Second, given the limited time for the project, implementing SLAM seemed too ambitious. We therefore chose a simpler approach to exploration and navigation: base the strategy mainly on the ultrasonic sensor, and perhaps add the depth camera later in the project to optimize exploration and navigation.

Technical Information

The ultrasonic sensor is located at the front of the robot’s body. It has a range of 250 to 1500 millimeters and a beam angle of 40°. Because of this wide beam, the robot occasionally classifies objects at its side as obstacles, although it could walk past them. If an obstacle in front of Loomo is farther away than 1500 millimeters, the function getDistance() still returns 1500.
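Because of the saturation described above, a reading of 1500 only means that the nearest obstacle is at least 1.5 meters away. The following helper is a minimal sketch for interpreting readings, assuming the raw value is a float number of millimeters as described for getDistance(); the class and method names are hypothetical and not part of the Loomo SDK.

```java
/** Hypothetical helper for interpreting raw ultrasonic readings given in millimeters. */
final class UltrasonicReading {
    // Upper end of the sensor range; farther obstacles are reported with this value too.
    static final float MAX_RANGE_MM = 1500f;

    private UltrasonicReading() {}

    /** Converts a raw reading in millimeters to meters. */
    static float toMeters(float millimeters) {
        return millimeters / 1000f;
    }

    /**
     * True if the reading sits at the saturation value, i.e. the obstacle (if any)
     * is at least 1.5 m away and its exact distance is unknown.
     */
    static boolean isSaturated(float millimeters) {
        return millimeters >= MAX_RANGE_MM;
    }

    /** True if the reading lies closer than the given distance in meters. */
    static boolean isWithin(float millimeters, float distanceMeters) {
        return toMeters(millimeters) < distanceMeters;
    }
}
```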

For controlling the robot, two control modes are available: RAW and NAVIGATION. In control mode RAW, the linear and angular velocity of the robot are set directly with the functions setLinearVelocity(float linearVelocity) and setAngularVelocity(float angularVelocity). In control mode NAVIGATION, the robot is controlled by setting checkpoints with the functions addCheckPoint(float x, float y) or addCheckPoint(float x, float y, float theta). Theta is only needed when the robot is supposed to perform a rotation. The robot then drives to the checkpoints that have been set.

Examples of setting a checkpoint:

- addCheckPoint(1, 0) makes the robot walk 1 meter forward

- addCheckPoint(0, 1) makes the robot walk 1 meter to the left

- addCheckPoint(0, 0, (float) (Math.PI / 2)) makes the robot perform a 90° rotation to the left

- addCheckPoint(0, 0, (float) -(Math.PI / 2)) makes the robot perform a 90° rotation to the right

Before setting a checkpoint, the origin of the robot’s internal coordinate system can be reset by calling cleanOriginalPoint() followed by setOriginalPoint(getOdometryPose(-1)). Passing -1 to getOdometryPose() returns the latest known pose, so the origin is set to the robot’s current position.
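The checkpoint examples above can be wrapped in small move helpers. The sketch below is hypothetical: CheckpointTarget, Moves and RecordingTarget are illustrative names, not SDK types, and the sketch assumes that the origin is reset before every checkpoint so that all coordinates are relative to the robot's current pose. In a real app, resetOrigin() would call cleanOriginalPoint() and setOriginalPoint(getOdometryPose(-1)), and the two addCheckPoint methods would forward to the SDK calls of the same name.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical stand-in for the NAVIGATION-mode calls described above. */
interface CheckpointTarget {
    void resetOrigin(); // stands for cleanOriginalPoint() + setOriginalPoint(getOdometryPose(-1))
    void addCheckPoint(float x, float y);
    void addCheckPoint(float x, float y, float theta);
}

/** Expresses the checkpoint examples as simple relative moves. */
final class Moves {
    private Moves() {}

    static void forward(CheckpointTarget t, float meters) {
        t.resetOrigin();
        t.addCheckPoint(meters, 0f); // +x points to the front of the robot
    }

    static void turnLeft90(CheckpointTarget t) {
        t.resetOrigin();
        t.addCheckPoint(0f, 0f, (float) (Math.PI / 2)); // positive theta: left rotation
    }

    static void turnRight90(CheckpointTarget t) {
        t.resetOrigin();
        t.addCheckPoint(0f, 0f, (float) -(Math.PI / 2)); // negative theta: right rotation
    }
}

/** Test double that records the calls instead of moving a robot. */
final class RecordingTarget implements CheckpointTarget {
    final List<String> calls = new ArrayList<>();

    public void resetOrigin() {
        calls.add("origin");
    }

    public void addCheckPoint(float x, float y) {
        calls.add("cp(" + x + "," + y + ")");
    }

    public void addCheckPoint(float x, float y, float theta) {
        calls.add("cp(" + x + "," + y + "," + theta + ")");
    }
}
```

RecordingTarget lets the helpers be checked without a robot: Moves.forward(target, 0.5f) records an origin reset followed by the checkpoint (0.5, 0).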

Control mode NAVIGATION has the advantage that in this mode the automatic obstacle avoidance is available. By calling setUltrasonicObstacleAvoidanceEnabled(true) and setUltrasonicObstacleAvoidanceDistance(float meters) the automatic obstacle avoidance can be activated and configured. The robot will then stop automatically when an obstacle is detected within the specified distance. Associated with the automatic obstacle avoidance is the ObstacleStateChangedListener. This listener is called when an obstacle appears or disappears. This makes it easy to define what the robot is supposed to do in case an obstacle appears. The ObstacleStateChangedListener can only be used in control mode NAVIGATION.

Because of these advantages, we decided to use control mode NAVIGATION for controlling the robot. Switching between the two control modes is not recommended, because it is either impossible or causes various problems. For example, it is not possible to switch from control mode NAVIGATION to control mode RAW within the ObstacleStateChangedListener, as the listener is not available in control mode RAW. In addition, after switching from control mode NAVIGATION to control mode RAW, the automatic obstacle avoidance stops working and the robot will collide with obstacles.

Another crucial part of control mode NAVIGATION is the CheckPointStateListener. This listener is called when the robot reaches a previously set checkpoint. In our program, the CheckPointStateListener is used to set the next checkpoint. Depending on the current state of the robot, different calculations take place and different checkpoints are set.

Control mode NAVIGATION has a disadvantage: It is not possible to make the robot walk directly backwards. To achieve this the robot has to perform a 180° rotation, then walk forward and then perform another 180° rotation to face the previous direction. In control mode RAW it is possible to directly make the robot move backwards.
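The rotate, walk, rotate workaround can be checked with an idealized pose model. This is a sketch with a hypothetical Pose class that assumes perfect rotations and straight-line motion; on the real robot, each rotation adds its own inaccuracy.

```java
/** Minimal idealized 2D pose: no drift, perfect rotations. */
final class Pose {
    double x;     // meters, +x = initial forward direction
    double y;     // meters, +y = initial left
    double theta; // radians, 0 = initial forward, positive = left

    void rotate(double radians) {
        theta += radians;
    }

    void forward(double meters) {
        x += meters * Math.cos(theta);
        y += meters * Math.sin(theta);
    }

    /** Emulates a backward move with the NAVIGATION-mode workaround. */
    void backwardViaRotations(double meters) {
        rotate(Math.PI);  // first 180° rotation
        forward(meters);  // walk forward, i.e. away from the previous heading
        rotate(Math.PI);  // second 180° rotation to face the previous direction again
    }
}
```

Starting from the origin, backwardViaRotations(0.5) ends at approximately (-0.5, 0) with the original heading, which is the same result a direct backward move in control mode RAW would give.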

For serializing and deserializing data, we use Gson (https://github.com/google/gson), a Java library that converts Java objects into their JSON representation and JSON strings back into equivalent Java objects. In our program, Gson is used to serialize and deserialize the LinkedList that contains all the position objects, so that the list can be stored in and retrieved from a JSON file. The list is stored as a whole instead of position by position. This keeps read and write accesses to a minimum and guarantees that the order of the positions stays intact.

Design Decisions

Data Structure for Storing Location Related Data

For storing data about a position that the robot reaches during the exploration process, position objects are used. Those objects hold information about the x and the y coordinates of a position as well as the orientation that the robot had at this position. Orientation means the direction of movement relative to the starting point. At the starting point the direction of movement (i.e. orientation) is forward.

For collecting all the positions that the robot reaches during the exploration, a LinkedList is used. LinkedList was chosen because it keeps the positions in the right order. So the first position that the robot reaches is the first element in the list and the last position that the robot reaches is the last element in the list. Keeping the order of the positions intact can be helpful for solving the task of navigation.
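A minimal sketch of these two data structures, assuming double coordinates in meters and an Orientation enum with the four values used by the program; the field names and the record method are illustrative and may differ from the actual Position.java.

```java
import java.util.LinkedList;

/** Direction of movement relative to the starting point. */
enum Orientation { FORWARD, BACKWARD, LEFT, RIGHT }

/** A position reached during exploration: coordinates in meters plus orientation. */
final class Position {
    final double x;
    final double y;
    final Orientation orientation;

    Position(double x, double y, Orientation orientation) {
        this.x = x;
        this.y = y;
        this.orientation = orientation;
    }
}

/** Collects positions in the order in which the robot reaches them. */
final class PositionLog {
    // Insertion order is preserved: first reached = first element, last reached = last element.
    final LinkedList<Position> positions = new LinkedList<>();

    void record(double x, double y, Orientation orientation) {
        positions.add(new Position(x, y, orientation));
    }
}
```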

Coordinate System

For calculating the x and y coordinates of the positions, a reference coordinate system is needed. Loomo’s coordinate system looks as follows: the positive x-axis points to the front of the robot and the positive y-axis points to the left of the robot. For calculating the coordinates of the positions, we use a coordinate system that matches Loomo’s coordinate system.

Therefore, the coordinates can be calculated as follows:

- When the robot moves forward, the x coordinate is incremented.

- When the robot moves backward, the x coordinate is decremented.

- When the robot moves left, the y coordinate is incremented.

- When the robot moves right, the y coordinate is decremented.

The x and y coordinates are held globally and updated when the robot reaches a checkpoint or an obstacle.

The Base SDK for Loomo provides the function getOdometryPose(long time). Passing -1 to that function returns the latest pose of the robot. The problem with the returned poses is that their x and y coordinates are not intuitive. For example, if the robot moves forward by 1 meter, one would expect the x coordinate to increase by 1, but this is not what we observed. It is not clear how Loomo calculates the coordinates of its poses internally. Therefore, we could not find a way to use the coordinates of those poses to add new checkpoints, and we had to define our own x and y coordinates and work with those instead of Loomo’s internally calculated coordinates.

Orientation of the Robot

In order to be able to calculate the coordinates correctly, it is necessary to know in which direction the robot is moving. Therefore, a global variable was defined to keep this information. The orientation of the robot is updated accordingly whenever the robot performs a rotation. The different orientations are: FORWARD, BACKWARD, LEFT and RIGHT (see class Orientation.java).

State of the Robot

In order to control the sequence of movements of the robot, it is necessary to determine what the robot should do next whenever a certain event occurs. For this purpose different states were defined (see class State.java). The current state of the robot influences how the next checkpoint is set. This is achieved by checking the current state of the robot whenever a previously set checkpoint is reached or an obstacle is detected and reacting accordingly. This check is performed when the CheckPointStateListener or the ObstacleStateChangedListener is called.

The different states that the robot can reach are the following:

- START: The robot is in the initial phase of the exploration process. No obstacle has appeared yet.

- WALKING: The robot is walking forward to the next checkpoint.

- CHECKING WALL: The robot has just performed a right turn in order to check if the wall next to it has ended.

- OBSTACLE DETECTED: The robot detected an obstacle and has just performed a turn in order to walk around the obstacle.

- CORNER LEFT: The robot detected a corner during the wall check and has just turned left to start walking around the corner.

- CORNER FORWARD: The robot has just walked a bit further in order to avoid getting stuck at the corner with its right wheel.

- CORNER RIGHT: The robot has just turned right in order to pass the corner.

- CORNER DONE: The robot has just passed the corner and is now following the new wall.

It is possible to add more states to be able to react to more events.

Description of the Program Components

The components for the exploration program can be found in the package “navigationrobot”. The package consists of the following classes:

- The MainActivity class is the entry point of the program. This Activity holds the buttons for starting and stopping the exploration process.

- The State class is an enumeration of all the possible states that the robot can reach. Those states are necessary for calculating the current coordinates of the robot and determining the next checkpoint to be set.

- The Orientation class is an enumeration of the different directions of movement. The orientation is relevant for calculating the current coordinates of the robot.

- The Position class represents a position that the robot reaches during the exploration. The position objects consist of the x and y coordinates as well as the current orientation of the robot.

- The StorageHelper class provides functions for storing and retrieving data.

- The Exploration class handles the actual exploration process. This class provides the functions for starting and stopping the exploration, for calculating coordinates, for handling obstacle appearances and setting checkpoints.

- The MapActivity class holds the layout for displaying the map (i.e. the motion path of the robot during the exploration process).

- The MapView class is responsible for actually drawing the map on the screen.

Further information about the different classes and their functions is provided in the detailed comments in the code of the respective classes.

Description of the Exploration Process

Important Parameters

The obstacle avoidance distance used in the program is 1 meter. The default walking distance is 0.5 meters, which means that the robot performs a wall check after every 0.5 meters. The walking distance for walking around a corner is 1.25 meters: to reach the new wall, the robot has to walk 1 meter to get past the obstacle avoidance distance to the previous wall, and the extra 0.25 meters make sure that it really reaches the new wall. The threshold for increasing the robot’s distance to the wall is 0.8 meters. If the distance falls below this threshold, the robot’s movements are adjusted to increase the distance again and prevent the robot from getting stuck at the wall.
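The parameters, including the arithmetic behind the corner walking distance, can be collected as constants. This is a sketch; the constant names, including CORNER_MARGIN, are hypothetical and need not match the names used in Exploration.java.

```java
/** Distances from the parameter description, in meters (names are illustrative). */
final class ExplorationParameters {
    private ExplorationParameters() {}

    /** The robot stops when an obstacle is closer than this. */
    static final float OBSTACLE_AVOIDANCE_DISTANCE = 1.0f;

    /** Distance between two wall checks. */
    static final float DEFAULT_WALKING_DISTANCE = 0.5f;

    /** Extra distance that makes sure the robot really reaches the new wall. */
    static final float CORNER_MARGIN = 0.25f;

    /** 1.0 m to get past the avoidance distance plus 0.25 m margin = 1.25 m. */
    static final float CORNER_WALKING_DISTANCE = OBSTACLE_AVOIDANCE_DISTANCE + CORNER_MARGIN;

    /** Below this distance to the wall, the robot steers away from it. */
    static final float MIN_WALL_DISTANCE = 0.8f;
}
```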

Basic Flow

- The robot moves forward until it reaches the first obstacle.

- The robot performs a 90° rotation to the left whenever it reaches an obstacle and then walks forward until it reaches the next obstacle.

- On the way from one obstacle to the next the robot regularly performs a 90° rotation to the right in order to check if the wall to its right has ended.

- If the wall has not ended, the robot turns back and keeps walking forward along the wall in order to reach the next obstacle.

- If the wall has ended, that means that there is a corner and the robot walks around the corner.

The basic principle is that whenever the robot reaches a previously set checkpoint or detects an obstacle, a new checkpoint is set so that the robot keeps moving. The setting of a new checkpoint happens either in the CheckPointStateListener or the ObstacleStateChangedListener.

Start of the Exploration

At the beginning of the exploration process, the state of the robot is set to START and the orientation is set to FORWARD. The automatic obstacle avoidance is enabled and the first checkpoints are set. In this phase, whenever the robot reaches a checkpoint, the next checkpoint is set to keep the robot walking forward until it reaches the first obstacle.

Obstacles

Whenever the robot detects an obstacle it stops because of the automatic obstacle avoidance. It then performs a 90° rotation to the left in order to start walking along the obstacle (i.e. wall) and reach the next obstacle.

Wall Check

The ultrasonic sensor is located at the front of the robot’s body. Therefore, when the robot is walking along a wall to the next obstacle, it cannot detect when the wall to its right ends, which would lead to the robot ignoring parts of the room during exploration. To solve this problem, a regular wall check is performed: every 0.5 meters the robot stops and performs a 90° rotation to the right to check whether there is still an obstacle. If there is, the robot turns back and keeps walking along the obstacle. If no obstacle is detected during the wall check, the wall next to the robot has ended and the robot needs to walk around the corner to keep following the wall.

Walking Around Corners

It is not recommended to make the robot walk around the corner as soon as it detects that the wall next to it has ended. This often leads to the robot getting stuck at the corner with its right wheel. To avoid this, the following approach is applied:

- The robot detects that the wall has ended.

- The robot performs a 90° rotation to the left so that it faces the previous direction.

- The robot walks 0.5 meters forward.

- The robot performs a 90° rotation to the right.

- The robot walks 1.25 meters forward.

- The robot performs a 90° rotation to the right and now faces the new wall.

- The robot approaches the new wall and continues with its regular behavior of following the wall.

In the fifth step, the robot walks 1.25 meters forward to make sure that it reaches the new wall. It has to walk 1 meter to get past the obstacle avoidance distance of 1 meter; the extra 0.25 meters compensate for possible inaccuracies in the robot’s movements. For example, while following the previous wall, the robot may have drifted farther away from it because of imprecise movements. In that case, walking just 1 meter is not enough to pass the corner: when the robot then turns to approach the new wall, the ultrasonic sensor might not detect an obstacle, because the robot is in fact not standing in front of the wall yet but a few centimeters to the side.
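The maneuver can be traced to confirm where the robot ends up. The following sketch is an idealized simulation, not the program's implementation: it assumes perfect 90° turns and exact distances, encodes the heading as an index (0 = FORWARD, 1 = LEFT, 2 = BACKWARD, 3 = RIGHT), and uses the hypothetical class name CornerManeuver. It starts in the wall-check pose (facing RIGHT) and applies the second to sixth steps of the list above.

```java
/** Idealized trace of the corner maneuver; coordinates in meters. */
final class CornerManeuver {
    // Heading index: 0 = FORWARD (+x), 1 = LEFT (+y), 2 = BACKWARD (-x), 3 = RIGHT (-y).
    private static final int[] DX = {1, 0, -1, 0};
    private static final int[] DY = {0, 1, 0, -1};

    double x;
    double y;
    int heading;

    CornerManeuver(int startHeading) {
        this.heading = startHeading;
    }

    void turnLeft() {
        heading = (heading + 1) % 4;
    }

    void turnRight() {
        heading = (heading + 3) % 4;
    }

    void forward(double meters) {
        x += DX[heading] * meters;
        y += DY[heading] * meters;
    }

    /** Runs the maneuver, starting from the pose reached after a wall check without a wall. */
    void run() {
        turnLeft();     // step 2: face the previous direction again
        forward(0.5);   // step 3: walk a bit past the end of the wall
        turnRight();    // step 4
        forward(1.25);  // step 5: 1 m avoidance distance plus 0.25 m margin
        turnRight();    // step 6: now facing the new wall
    }
}
```

Starting at (0, 0) facing RIGHT (index 3), run() ends at (0.5, -1.25) facing BACKWARD (index 2): relative to its original direction along the first wall, the robot has turned 180° and now faces the new wall.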

Keeping Distance to the Wall

Due to imprecise movements of the robot it might happen that it gets closer to the wall over time. This can lead to the robot getting stuck at the wall. To avoid this, the following approach is applied:

When the robot performs the regular wall check, the ultrasonic distance is checked. If the distance falls below the threshold of 0.8 meters, the robot’s movements are corrected in order to avoid it getting stuck at the wall. Therefore, the next checkpoint is set to make the robot walk forward and slightly left.

Throughout the project, the robot always had a slight right twist, which is why we chose this approach. If the circumstances change, for example by inflating the robot’s tires, the right twist might disappear or get stronger, or the robot might develop a left twist instead. In that case, the threshold and the correction value need to be adjusted to the new characteristics of the robot.

Another obvious approach to increase the distance to the wall would be to make the robot walk backward. This does not work because in control mode NAVIGATION it is not possible to set a checkpoint so that the robot directly walks backward. Instead it would be necessary to perform a 180° rotation, then walk forward and then rotate back. Obviously this would lead to an even more inaccurate path of movement because with every rotation the inaccuracies of the robot’s movements accumulate.

In control mode RAW it is possible to make the robot directly walk backward. But this approach does not work either because the setting of new checkpoints in our program happens in the CheckPointStateListener. This listener only works in control mode NAVIGATION and not in control mode RAW. Therefore, it is not possible to switch to control mode RAW within the listener.


Brief summary of the approach: If the robot moves closer to the wall than 0.8 meters, the distance is increased by making the robot move forward and slightly left. If the distance is between 0.8 and 1 meter, no correction is necessary and the robot walks forward (with its usual right twist). If the robot moves further away from the wall than the specified obstacle avoidance distance of 1 meter, it walks towards the wall when performing the wall check.
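The three cases of the summary can be expressed as a small decision function. This is a sketch under assumptions: the distance is the reading in meters taken during the wall check, the class and enum names are hypothetical, and the size of the left correction is passed in as a parameter because it depends on the robot's current twist.

```java
/** Outcome of the distance check performed during the wall check. */
enum WallCorrection { STEER_AWAY, NONE, APPROACH }

final class WallDistancePolicy {
    static final float MIN_WALL_DISTANCE = 0.8f;           // meters
    static final float OBSTACLE_AVOIDANCE_DISTANCE = 1.0f; // meters

    private WallDistancePolicy() {}

    /** Maps the measured wall distance to one of the three cases of the summary. */
    static WallCorrection classify(float distanceMeters) {
        if (distanceMeters < MIN_WALL_DISTANCE) {
            return WallCorrection.STEER_AWAY; // too close: walk forward and slightly left
        }
        if (distanceMeters <= OBSTACLE_AVOIDANCE_DISTANCE) {
            return WallCorrection.NONE;       // 0.8 m to 1 m: walk straight ahead
        }
        return WallCorrection.APPROACH;       // beyond 1 m: robot moves toward the wall during the check
    }

    /**
     * Next forward checkpoint {x, y} in the robot's frame (+y = left).
     * Only the too-close case adds a sideways offset to the left.
     */
    static float[] forwardCheckpoint(float distanceMeters, float step, float leftCorrection) {
        float y = classify(distanceMeters) == WallCorrection.STEER_AWAY ? leftCorrection : 0f;
        return new float[] {step, y};
    }
}
```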

Obstacle Detection: False Positives

Occasionally, the ultrasonic sensor detects an obstacle where in fact there is none. To avoid unwanted behavior and stop the robot from performing a left rotation when there is no obstacle, the following approach is applied: at the beginning of the ObstacleStateChangedListener, a delay of 300 milliseconds is set. After the delay, the ultrasonic distance is checked again. If the distance is less than the defined obstacle avoidance distance of 1 meter, there really is an obstacle in front of the robot; in that case, the robot turns left and proceeds by walking along the obstacle. If the distance is greater than the obstacle avoidance distance, there is no obstacle in front of the robot; in that case, nothing happens and the robot continues its previous movement.
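A minimal sketch of this double check, assuming the distance can be read in meters through a supplier; the class name ObstacleConfirmation and the DoubleSupplier abstraction are hypothetical. Sleeping blocks the calling thread; the text does not say whether the original program blocks the listener callback or schedules the re-check differently.

```java
import java.util.function.DoubleSupplier;

/** Re-checks a detected obstacle after a short delay to filter out false positives. */
final class ObstacleConfirmation {
    private ObstacleConfirmation() {}

    /**
     * Waits for delayMillis (300 ms in the program described above), reads the
     * distance again and reports whether an obstacle is still closer than the
     * avoidance distance. Only then should the robot turn left.
     */
    static boolean confirmObstacle(DoubleSupplier distanceMeters, long delayMillis,
                                   double avoidanceDistance) {
        try {
            Thread.sleep(delayMillis);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt(); // preserve the interrupt for the caller
        }
        return distanceMeters.getAsDouble() < avoidanceDistance;
    }
}
```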

Updating Orientation

Whenever the robot performs a rotation its orientation needs to be updated accordingly:

- FORWARD – Left rotation – LEFT

- LEFT – Left rotation – BACKWARD

- BACKWARD – Left rotation – RIGHT

- RIGHT – Left rotation – FORWARD

- FORWARD – Right rotation – RIGHT

- RIGHT – Right rotation – BACKWARD

- BACKWARD – Right rotation – LEFT

- LEFT – Right rotation – FORWARD

For further details see function updateOrientation(String direction) in class Exploration.java.
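Because the four orientations form a cycle, the table above reduces to stepping through them in counter-clockwise order. The sketch below assumes that updateOrientation receives the strings "left" or "right"; that string format, and placing the logic in the enum itself, are assumptions rather than a copy of Exploration.java.

```java
/** Orientation relative to the starting direction, listed in counter-clockwise order. */
enum Orientation {
    FORWARD, LEFT, BACKWARD, RIGHT;

    private static final Orientation[] CYCLE = values();

    /** A 90° left rotation moves one step counter-clockwise. */
    Orientation turnLeft() {
        return CYCLE[(ordinal() + 1) % CYCLE.length];
    }

    /** A 90° right rotation moves one step clockwise. */
    Orientation turnRight() {
        return CYCLE[(ordinal() + CYCLE.length - 1) % CYCLE.length];
    }

    /** Hypothetical counterpart of updateOrientation(String direction). */
    Orientation update(String direction) {
        switch (direction) {
            case "left":
                return turnLeft();
            case "right":
                return turnRight();
            default:
                throw new IllegalArgumentException("Unknown direction: " + direction);
        }
    }
}
```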

Updating Coordinates and Storing Positions

The x and y coordinates are updated when the robot reaches a checkpoint or when an obstacle appears. The calculation of the new coordinates depends on the orientation of the robot:

- FORWARD: The x coordinate is incremented.

- BACKWARD: The x coordinate is decremented.

- LEFT: The y coordinate is incremented.

- RIGHT: The y coordinate is decremented.

In case the robot reaches a checkpoint, the travelled distance can easily be added to or subtracted from the current value of the x or y coordinate. The travelled distance is either 0.5 meters (default walking distance) or 1.25 meters in case the robot has passed a corner.

In case of an obstacle, the calculation is a bit more difficult. At every checkpoint, the current ultrasonic distance to the front of the robot is saved. If the robot detects an obstacle on its way to the next checkpoint, the ultrasonic distance at this position can be subtracted from the last saved value. The result equals the distance travelled from the last checkpoint to the current position where the obstacle appeared. This value can then be added to or subtracted from the current value of the x or y coordinate.

When the coordinates are updated, a corresponding position object (see class Position.java) with the current x and y coordinates and orientation is created. This position is added to the LinkedList that holds all the positions that have been reached so far.

For further details, see function updateCoordinates() in class Exploration.java.
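The update rules for checkpoints and obstacles can be combined in a small tracker. This sketch uses hypothetical names (CoordinateTracker, onCheckpointReached, onObstacle), takes all distances in meters, and leaves out the creation of the Position object and the list handling.

```java
/** Direction of movement relative to the starting point. */
enum Orientation { FORWARD, BACKWARD, LEFT, RIGHT }

/** Tracks the program's own x/y coordinates (meters) from checkpoints and obstacles. */
final class CoordinateTracker {
    double x;
    double y;
    Orientation orientation = Orientation.FORWARD;

    /** Checkpoint reached: add the planned distance (0.5 m, or 1.25 m after a corner). */
    void onCheckpointReached(double plannedDistance) {
        move(plannedDistance);
    }

    /**
     * Obstacle appeared before the next checkpoint: the travelled distance is the
     * ultrasonic distance saved at the last checkpoint minus the current distance.
     */
    void onObstacle(double distanceAtLastCheckpoint, double currentDistance) {
        move(distanceAtLastCheckpoint - currentDistance);
    }

    private void move(double distance) {
        switch (orientation) {
            case FORWARD:  x += distance; break;
            case BACKWARD: x -= distance; break;
            case LEFT:     y += distance; break;
            case RIGHT:    y -= distance; break;
        }
        // The program would also append a Position with x, y and orientation to the list here.
    }
}
```

For example, starting at (0, 0) facing FORWARD, reaching a 0.5 m checkpoint gives x = 0.5; if the robot then faces LEFT, the saved distance was 1.4 m and the obstacle appears at 1.0 m, the tracker adds about 0.4 to y.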

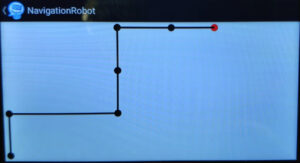

Example of an Exploration

Basic Map

I implemented a very basic map in order to check whether the exploration works as intended. I will not go into detail about this map here, because it is not the one that was supposed to be used in the final application. Mingze was working on the map, but at the time I needed it for debugging and testing, his version was not finished yet. Apart from that, we needed a basic map in order to continue working on the navigation part of our task. Therefore, we used my map as a temporary solution until Mingze had finished his map. It was not until later in the project that we decided, together with David, that we would not implement the navigation part.

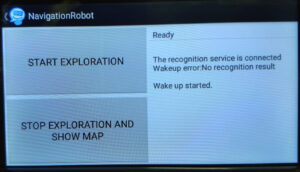

User Interface

The main screen of the application consists of two buttons and a text field. The text field shows information about the voice service. One button starts the exploration and the other stops it. Pressing the stop button causes the LinkedList containing all the position objects to be stored in a file. Afterwards, a new screen appears that shows the motion path of the previous exploration. This new screen only appears if a file is already present in the storage; otherwise, the user is prompted to run the exploration first before being able to see a map.

Problems and Limitations

The exploration with the described strategy only works under certain conditions:

- The exploration will only work in rooms with right-angled corners. This is due to the fact that the robot cannot flexibly adapt to other shapes as its behavior is predetermined. For example, the robot is told to perform a 90° rotation to the left whenever an obstacle occurs, no matter what the circumstances of the given room are.

- Tables, chairs or low objects are problematic as they cannot be detected by the robot reliably. For example, if the ultrasonic sensor does not exactly face the table leg or the tabletop or if there is a very low object in front of the robot, the ultrasonic sensor will not be able to detect the obstacle. In those cases the robot might get stuck.

- The room should not be too small. The defined obstacle avoidance distance is 1 meter, which might be a problem in very small rooms.

The described exploration strategy has different problems and limitations:

- The strategy only explores the outline of a room and ignores its center.

- The exploration is quite slow. The constant rotations and the double check of the ultrasonic distance to prevent false positives in obstacle recognition take a lot of time.

- The robot currently has a slight right twist. If the tires of the robot are inflated, this right twist might get stronger, disappear or become a left twist. In this case the correction value for keeping the expected distance to the wall needs to be adjusted.

- When the robot reaches a checkpoint, the x and y coordinates are updated with fixed values. If the robot is supposed to walk 0.5 meters forward, the x coordinate is raised by 0.5 (default walking distance) when it reaches the checkpoint. This does not necessarily mean that the robot really moved 0.5 meters forward: due to inaccuracies in its movements, it might have moved 0.6 or 0.4 meters, but the x coordinate is raised by 0.5 in every case. Also, if the robot performs a wrong rotation during the exploration, all following coordinates will be calculated incorrectly, because the actual orientation of the robot does not match the calculated orientation. These problems occur because the calculations work with the expected rather than the actual state of the robot, so the program cannot react flexibly to wrong movements.

The current version of the application also has some issues:

- Sometimes the start button has to be pushed multiple times before the robot actually starts moving. This also applies to the voice command for starting the exploration. The reason for this is unknown.

- Occasionally the robot shows unexpected behavior. For example, sometimes the robot turns right instead of left when an obstacle appears. There is no explanation for this behavior. It does seem to be a problem with the robot itself rather than the code because this problem usually disappears after restarting the robot.

- Sometimes the robot stops walking completely or gets stuck in repeated left and right rotations. The cause of this problem is unclear and has not been found yet.

- Repeated exploration does not work. After showing the map, going back to the main screen of the application and starting the exploration again, the exploration stops after the first obstacle is detected. The robot then repeatedly performs left and right rotations and does not go on with the exploration. The reason for this is not known.

Suggested Improvements and Alternative Strategies

A better approach for exploration than using the ultrasonic sensor could be to use the Intel RealSense camera instead. With computer vision techniques, the depth image of the camera could be used to determine the distance to an obstacle and to keep that distance while moving the robot. An advantage of the camera over the ultrasonic sensor is that it is placed in the head of the robot rather than in the body. It would therefore be possible to let the camera face the wall while the base of the robot stays parallel to the wall. This would make the regular rotations for the wall check unnecessary, and the motion path would probably become more accurate. Apart from that, the exploration would take less time. Using the camera instead of the ultrasonic sensor would also allow control mode RAW to be used instead of control mode NAVIGATION, so that the robot could move directly backwards if necessary. With the RealSense camera, the exploration could probably be designed in a much more flexible way, so that the robot can adapt to changing circumstances in the environment.

Future Work

To make it possible to explore a complete room successfully with the described strategy, the above-mentioned problems need to be solved. The center of the room needs to be explored as well as the outline, and the strategy should be adapted to react flexibly to changing circumstances in the environment. The positions are stored persistently, so a room does not need to be explored again unless something changes. Theoretically, the stored positions could be used to create a map and to navigate based on this map. To achieve this, however, there needs to be a way to tell the robot where in the map it is located at the beginning of navigation. Navigation is not implemented at all at the moment, so this task still needs to be solved in the future.

But as was said before, it might be better to completely refrain from using the ultrasonic sensor and instead use the Intel RealSense camera to solve the task of exploration and navigation.